Dilexi Te is a study in how to misrepresent reality so that you can force it into the cherry-picked version of the Gospel you've decided to preach today. Pope Leo XIV has inadvertently highlighted exactly why it is increasingly difficult to take Catholic teaching seriously. Not only does he fundamentally alter the teaching, he points out why terms must be continuously redefined in order to keep the Church relevant.

13. Looking beyond the data — which is sometimes “interpreted” to convince us that the situation of the poor is not so serious — the overall reality is quite evident: “Some economic rules have proved effective for growth, but not for integral human development. Wealth has increased, but together with inequality, with the result that ‘new forms of poverty are emerging.’ The claim that the modern world has reduced poverty is made by measuring poverty with criteria from the past that do not correspond to present-day realities. [emphasis added] In other times, for example, lack of access to electric energy was not considered a sign of poverty, nor was it a source of hardship. Poverty must always be understood and gauged in the context of the actual opportunities available in each concrete historical period.” [10]

~Pope Leo XIV, Dilexi Te

TLDR: The Church must continuously redefine the meaning of the word "poverty" so as to make sure someone, somewhere, is always in poverty. Insofar as poverty is a moral issue, Pope Leo is advocating that Catholics become moral relativists. He has completely buried the idea of "being grateful for the things you have, the good things God gave you" and replaced it with the socialist politics of envy, where it doesn't matter how much you have if someone else has more.

Poverty Is Not Socially Defined, Part I

Why the constant redefinition? Because the Pope doesn't understand basic economics. Lack of access to energy is not a "sign" of poverty; it is the cause of poverty. Cheap energy is the gateway to wealth. If you do not have access to cheap energy, you will never be wealthy. Period. Mic drop.

Leo, if you want to get rid of poverty - if you seriously want that - then you should be fighting tooth and nail to get small modular nuclear reactors (SMRs) into every impoverished country in the world.

Nuclear power is now a corporal work of mercy. But Leo is as the babes; he knows nothing of how poverty actually works or how it is actually remediated.

Poverty Is Not Socially Defined, Part II

Because Leo doesn't understand this, he doesn't realize that his assertion - poverty is socially defined - is fundamentally absurd. The most severe form of poverty, the deprivation of life itself, is the baseline for poverty. That baseline perdures regardless of century or social culture. A person needs a basic amount of food (2100 calories, plus vitamin and mineral micronutrients) and water to live, period. That minimum amount of food and water is an unchanging constant around the globe and across all the endless centuries. Food, water, life - this isn't hard. It isn't complicated. The criteria match across past, present and future.

So, let us use that baseline as the starting point for comparison. Life expectancy in the 1st century AD Middle East was generally low, averaging between 20 and 35 years at birth. 90% of the population died before they turned 55 or 60. Today, life expectancy in the same geographic region of the world is 77 to 80.

Wow. That doesn't help Leo's case at all.

Well, let's take a different tack. Let's look at how much labor it takes to avoid starvation. A first-century Middle Eastern peasant engaged in subsistence farming, that is, engaged in enough labor to avoid starving to death, would need to work approximately 8 hours a day, at least 250 days per year: roughly 2000 hours per year. Today, to achieve that same goal (i.e., not starving to death), the Middle Eastern laborer would have to put in roughly 22 hours per week, or 1144 hours per year.
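Laid out as a worked calculation (the hour and day counts are the estimates given above; the 52-week working year behind the modern figure is inferred from 22 × 52 = 1144):

```latex
\begin{aligned}
\text{First century: } & 8\ \text{h/day} \times 250\ \text{days/yr} = 2000\ \text{h/yr} \\
\text{Today: }         & 22\ \text{h/wk} \times 52\ \text{wk/yr} = 1144\ \text{h/yr} \\
\text{Ratio: }         & \frac{1144}{2000} = 0.572 \approx 57\%
\end{aligned}
```

On these estimates, the modern laborer puts in about 57% of the hours the first-century peasant did, a cut of roughly 43%.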

Huh.

Apparently, poverty ain't what it used to be.

And that forms the basis for Leo's dilemma. He MUST insist that poverty is socially defined, because if he doesn't, he would have to admit the truth: by first-century standards, no one in the world today is poor. Even the poorest person today works only a little more than half as many hours for survival-level rations. Worse, there is no more smallpox, rinderpest or polio. Leprosy is essentially unknown and easily treated when it does pop up. Malnutrition and famine are now created by malevolent or inept politicians, not by plague, locust or bad weather. We have become so wealthy around the globe that poverty is now really just something politicians choose to inflict on populations by enforcing inept governmental policies.

Jesus was wrong about the mustard seed being "the smallest of all seeds." Similarly, if we judged physical poverty according to the standards of the world Jesus lived and preached in, then Jesus was also wrong about the poor always being with us. The poor, by His definition, are literally gone, disappeared, poof! no longer available for scrutiny. We don't have any Jesus-level poor with us anywhere, and we haven't for centuries. Obviously, that is decidedly not an acceptable conclusion.

This change in poverty began around 1800, when

the proportion of America’s population living below India’s poverty line was roughly as high then as it is in India today. The period 1850-1929 saw the poverty rate fall by some 20 percentage points. The US saw great progress against extreme poverty in this period.

Works of Mercy vs. Work of Politicians

Keep in mind, India and China together account for more than a third of the world's population, so when the Indian and Chinese politicians substantially eliminated extreme poverty in their own nations, that was a near-miraculous change. Literally billions of people no longer live on the edge of starvation.

But this enormous, earth-shattering leap goes completely unremarked in Leo's exhortation. Instead, we get this:

27. For this reason, works of mercy are recommended as a sign of the authenticity of worship,

Sure. But what are these works of mercy? "Literally half of the work of the Church [the corporal works of mercy] is either already irrelevant or on the verge of being rendered irrelevant." Given that the Chinese atheist citizens and the pagan Hindus were the ones who wiped out extreme poverty for billions of their own fellow citizens, does that mean the people who participate in Hindu pagan rituals or Communist party jamborees are engaged in authentic worship? After all, how can bringing billions of people out of poverty not be a work of mercy?

Ignoring Other Causes of Poverty

But it gets worse. Seriously worse. In first-world populations, poverty is overwhelmingly generated by single mothers: women who have chosen to divorce their husbands (80% of the time) or who never bothered to marry their baby-daddy. [1, 2, 3] And just as politicians are now the major creators of general poverty, this single-mother poverty has largely been created by the Church itself. After all, as Pope Leo inadvertently points out, the Church has actively worked to destroy the very families She claims to desire:

71. Many female congregations were protagonists of this pedagogical revolution. Founded in the eighteenth and nineteenth centuries, the Ursulines, the Sisters of the Company of Mary Our Lady, the Maestre Pie and many others, stepped into the spaces where the state was absent. They created schools in small villages, suburbs and working-class neighborhoods. In particular, the education of girls became a priority. [emphasis added, barely]

Apparently, the Popes do not realize that educating women destroys total fertility rates and family formation. [cf., for instance: 1, 2, 3, 4, 5] The very thing the Pope celebrates in his first apostolic exhortation, female education, delays the formation, or destroys the stability, of the family, the "original cell of social life" (CCC 2207), thereby creating the very poverty that very same Pope excoriates.

Higher female education correlates with later marriage. Educated women have higher divorce rates. Educated women tend to have fewer children. Why? Well, because educated women improve child health/education outcomes. Fewer children die in infancy, so infant mortality falls. High infant mortality encourages high fertility rates, while low infant mortality removes the woman's felt need to have multiple children. [1, 2, 3, 4]

TLDR: if you want lots of large families with low divorce rates, you should not be educating women. These are two contradictory goals.**

And this problem is not limited to first-world countries. Oddly enough, though the UN recognizes single mothers as a cause of global poverty, stating:

Single mothers are particularly vulnerable in this respect and are over-represented in poverty statistics.

the Pope says nothing. Sub-Saharan Africa has the highest poverty rate in the world, with 19 of the 20 poorest countries found in that region. It is essentially the last major area in the world that still suffers from extreme poverty. According to UNICEF (2022) and Gallup, about 32% of the children in sub-Saharan Africa live with a single mother, the highest rate in the world. The children of Africa's single mothers are 1.7 to 1.8 times more likely to experience stunting and 1.3 to 2.6 times more likely to die before they reach five years of age. Between 25% and 35% of sub-Saharan poverty incidence is correlated with single-mother household structures.

Yet, despite the lived testimony of literally every nation on earth concerning this major source of poverty, Pope Leo is completely silent on the subject of single-mother families. Not even a hint of this can be found in his apostolic exhortation. [1, 2, 3, 4, 5, 6] Instead, having passed over these early logical contradictions and maintained an absolutely stunning silence about the problem of single motherhood, Pope Leo turns to spouting outright fabrications:

90. The bishops stated forcefully that the Church, to be fully faithful to her vocation, must not only share the condition of the poor, but also stand at their side and work actively for their integral development. Faced with a situation of worsening poverty in Latin America, [emphasis added] the Puebla Conference confirmed the Medellín decision in favor of a frank and prophetic option for the poor and described structures of injustice as a “social sin.”

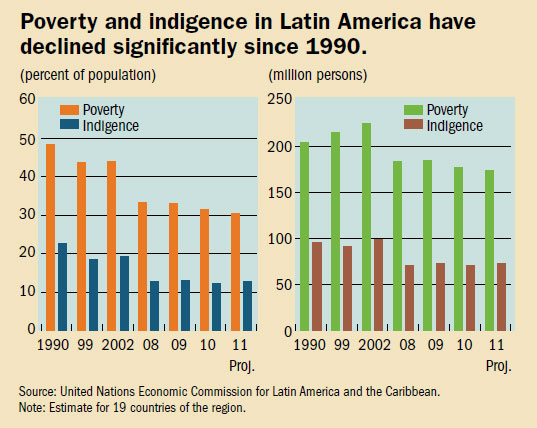

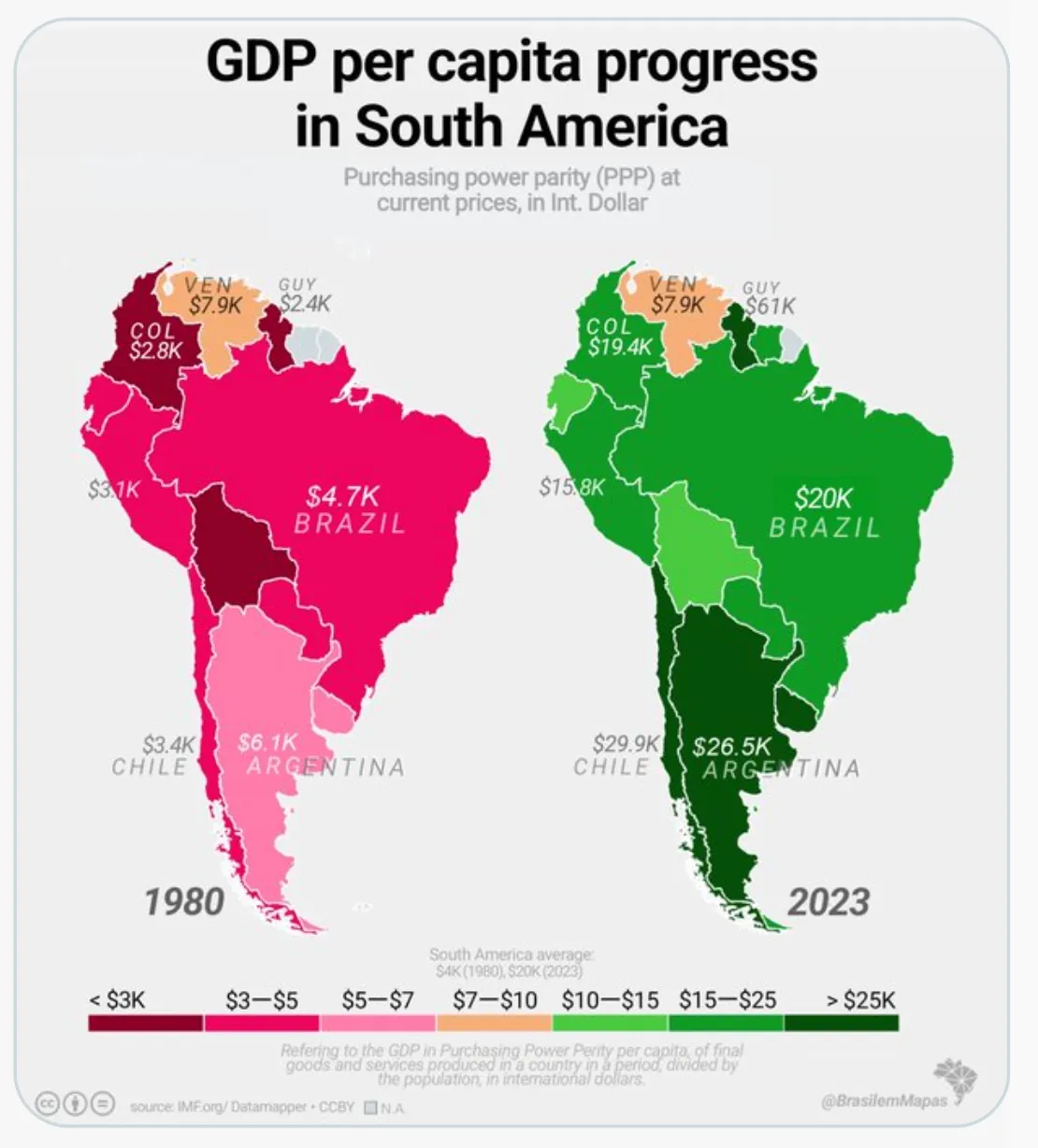

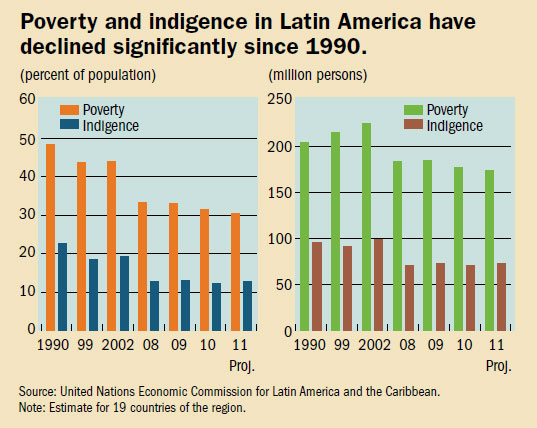

The Puebla Conference was held in 1979, over 45 years ago. While poverty may have been worsening in 1979, during a world-wide economic crisis, that crisis is also long-since past. Invoking it now, as if it were a real present danger, is simply a bald-faced lie. This is how poverty has changed since 1980. Maybe the Pope hasn't heard.

Now, you might argue that the Pope was just bringing forward an older example of a "structure of sin" created by injustice. But, for that to be true, he would have to demonstrate that the 1979 global economic crisis was intentional. That case is not made. 1979, the year of the Nicaraguan revolution, was not a good year for anyone. Exactly what "structure of sin" did the Pope or the bishops claim to have discovered? We never find out.

Nor does he mention how this whole social "structure" thing works. If those same people were later brought out of poverty, did they emerge due to a "structure of virtue"? If so, none of the bishops or Popes mention it. Which is strange, given the advances made against global poverty in the last 50 years. If poverty has decreased, doesn't that speak to increasing "structures of virtue" around the world? Shouldn't Pope Leo be lauding the good where it can be found?

You would think there would be room to praise the economic decisions and systems which have cut poverty to the bone. Instead, we get this:

92. We must continue, then, to denounce the “dictatorship of an economy that kills,” and to recognize that “while the earnings of a minority are growing exponentially, so too is the gap [emphasis added] separating the majority from the prosperity enjoyed by those happy few. This imbalance is the result of ideologies that defend the absolute autonomy of the marketplace and financial speculation...

95. As it is, “the current model, with its emphasis on success and self-reliance, does not appear to favor an investment in efforts to help the slow, the weak or the less talented to find opportunities in life.” [100]

One might ask if educating women necessarily teaches women an "emphasis on success and self-reliance", but let us pass by this question and address the new point Pope Leo has raised. The Pope complains of a growing gap between the rich and the poor. He seems completely oblivious to the growing gap between his "poverty porn" fantasy and the actual world his increasingly un-impoverished people inhabit:

What caused this extraordinary drop in the number of people experiencing extreme poverty? Hint: it wasn't the Catholic Church. Yet the Pope seems not only completely oblivious to this obliteration of extreme poverty, he actively denies the processes we know do help the poor:

114. At times, pseudo-scientific data are invoked to support the claim that a free market economy will automatically solve the problem of poverty.

Notice: the papal claim lacks any footnote supporting his bald assertion. But how could he support his claim? What Pope Leo asserts is simply false. Poverty has dropped dramatically; that is simply beyond question. It was and is free-market capitalism, and the legal structures which favor the protection of private property, which lifted the poor from their poverty. According to World Bank estimates, land titling programs in Latin America increased household incomes by 20-50% for participants [cf. 1, 2, 3, 4]:

"Without land tenure systems that work, economies risk missing the foundation for sustainable growth, threatening the livelihoods of the poor and vulnerable the most. It is simply not possible to end poverty and boost shared prosperity without making serious progress on land and property rights."

And keep in mind, not only is the Pope denying lived reality, he is arguably and actively contradicting Scripture, the Divine Word itself. After all, even the Apostles recognized the right to private property:

While it remained unsold, did it not remain your own? And after it was sold, was it not under your control? Why is it that you have conceived this deed in your heart? You have not lied to men, but to God. (Acts 5:4)

Notice, Peter did not take the line Leo takes. Peter did not chastise Ananias and Sapphira for having private property. Peter did not chastise either of them for refusing to share their private property with the community's poor.

Instead, Peter, the first Pope, expressly recognized and affirmed their right to do with their own property whatever they chose. At no point did he say that they sinned by refusing to share their wealth. Rather, Peter insisted their sin lay in pretense: they kept back part of the proceeds while pretending to have given everything. Their sin was in lying, not in refusing to give what they had to the poor.

How is it that neither Pope Leo nor Pope Francis, nor even St. John Chrysostom, references this very telling passage when discussing the plight of the poor and the duties of the wealthy?

Now, is poverty a problem? Certainly. Roughly 9% of the world still lives in extreme poverty, defined as a significant risk of starving to death. We must continue to work to end this. But this extreme poverty is largely the result of legal structures which do not protect private property, economies which are not built around capitalism, women who refuse to stay married to the father of their children, and government officials who do not enforce laws on private ownership, thus preventing the poor from protecting anything that they may manage to accumulate. Where private property laws are strong, poverty disappears. Where laws are weak, or weakly enforced, poverty grows. The Pope has no memory of these things. To whom can he appeal for succor?

Of all the saints who understood the need to protect the poor, and the importance of a wife staying married to her husband, certainly the Blessed Virgin figures most prominently. Why, then, does this Pope, almost alone among all the popes of the last several centuries, refrain from referencing the Blessed Virgin Mary in his closing panegyric on the poor?

You will search in vain through the apostolic exhortations and encyclicals of the previous several centuries for a papal document that closes without referencing or invoking the Blessed Virgin. The only such document that comes to mind is Mit brennender Sorge. Pope Leo has now added his very first apostolic exhortation to this very short list of papal documents with BVM-free closings.

Which is sad. After all, doesn't that reflect in him a certain... shall we say... poverty?

**And the goals are contradictory in part because the Church absolutely refuses to teach 1 Timothy 2:15. When discussing marriage, poverty and parents, Christianity calls on men to die for their wives. Men mostly do. But Christ apparently does not demand that women die for their husbands or children, so women mostly don't. Scripture says "a woman will be saved by the bearing of children," but absolutely no Catholic since the fourth century has invoked 1 Timothy 2:15. Both the Catechism of the Council of Trent and the Catechism of the Catholic Church studiously avoid mentioning that women are saved through the bearing of children (although both catechisms take pains to tell men that men need to die). Similarly, John Paul II's badly misnamed "Theology of the Body" discusses Ephesians 5:25 extensively while remaining totally, conspicuously, even suspiciously silent on 1 Timothy 2:15. You would think a theology of the body and human sexuality would somehow find time to mention how Scripture explicitly says a woman's eternal salvation and everlasting communion with God is built around her having children via sex, but you would be wrong.

Well, what about Mulieris Dignitatem, on the Role and Dignity of Women? No.... no... try again...

How about the Second Vatican Council, especially its section on the Laity? Ahhh.... no, we're fresh out.

Absolutely no one... and I mean NO ONE... since Augustine and Chrysostom talks about 1 Timothy 2:15.

But the Catholic Church is the finest Scripture shop in the district.